Overview

So I've been following the draft for a good few years now, and every year a few outlets post their rankings and people always lose their minds. A player must be good because Bob ranked him 7th, or a player isn't ranked by Pronman or Wheeler, so he's never gonna be anything. So I wanted to look at how accurate those rankings actually are.

Now the metric I used to grade the outlets is DY+3 through DY+5 scoring. I only looked at rankings since 2020, because that is when every outlet started posting their rankings, which means DY+5 data only exists for the 2020 class. I could have gone back way further with guys like Bob McKenzie or Scott Wheeler, but I wanted to keep it consistent across all of the outlets. I would have preferred to use only DY+5, because I feel like that is the point where you can generally tell what a player is going to be. Obviously predictive or retroactive rankings are never going to be perfect; even after every player in a class has retired, people will still argue about who should have gone first in a redraft. But I do feel like DY+5 is the first point where you can say with some confidence which tier most players will land in (NHLer, bust, star, etc.), and usually that holds for the rest of their career.

For this I needed a concrete ordering, not just tiers, so I used each player's DY+5 league-adjusted scoring as the ranking system. That is not a perfect target, because it basically means grading how well these scouts used their eyes to predict a player's points a few years out. They are (hopefully) looking beyond DY points and scoring to predict who will make the best NHLers, and then I am resetting the "target" to be the best scorers a few years later. I still think scoring is acceptable here, because the variance in outcomes for a guy who scores at a 0.5 league-adjusted PPG rate at 23 is far smaller than for the same rate at 18 during his DY, and again, it is the best stat we have for ranking all of these players.
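
To make the "league adjusted scoring as the ranking" idea concrete, here is a minimal sketch. The league multipliers, the player data, and the function names are all made up for illustration; they are not the values or the method actually used for this post.

```python
# Hypothetical league-strength multipliers (placeholders, not real values).
LEAGUE_FACTOR = {"NHL": 1.00, "AHL": 0.40, "SHL": 0.55}

def adjusted_ppg(points, games, league):
    """Points per game scaled by a league-strength multiplier."""
    if games == 0:
        return 0.0
    return points / games * LEAGUE_FACTOR[league]

def rank_by_adjusted_ppg(seasons):
    """Return {player: rank}, where rank 1 is the highest adjusted PPG."""
    ordered = sorted(
        seasons,
        key=lambda s: adjusted_ppg(s["points"], s["games"], s["league"]),
        reverse=True,
    )
    return {s["player"]: i for i, s in enumerate(ordered, start=1)}

# Made-up DY+5 seasons for three fictional players.
dy5_seasons = [
    {"player": "A", "points": 60, "games": 80, "league": "NHL"},  # 0.75
    {"player": "B", "points": 70, "games": 70, "league": "AHL"},  # 0.40
    {"player": "C", "points": 30, "games": 50, "league": "SHL"},  # 0.33
]
dy5_ranks = rank_by_adjusted_ppg(dy5_seasons)
print(dy5_ranks)
```

The resulting rank is what each outlet's pre-draft rank gets compared against.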

To actually grade the drafts, I didn't just look at the difference between each outlet's ranking and the DY+5 ranking. I also adjusted for pick value by creating a regression model that predicts a player's outcome given where he was picked. Otherwise, ranking the 57th best player 64th would have a much greater negative impact than ranking the best player 3rd. Obviously one of those mistakes is far worse, and it's not the one in the late 2nd. If a GM took the best player available with every single first round pick, he could spend his 2nd-7ths on Taro Tsujimoto every single year and still probably feel comfortable in his job. After creating the expected draft value model, I took the difference between the pick value for where each outlet had a player ranked and the pick value for where that player ranked after his DY+5. I then took the RMSE of that for each outlet (the difference in pick values is the error; I squared it for every player, took the mean, then took the square root). I also did the same for 2020-21 DY+4 and 2020-22 DY+3 rankings, because I had the data, even though I don't trust DY+3/4 rankings as much as I do DY+5.
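
Here is a rough sketch of that two-step grading. The exponential-decay shape of the curve, the synthetic "historical" data, and every name in the code are assumptions for illustration; the regression model actually used for this post may look quite different.

```python
import math

def fit_pick_value(picks, outcomes):
    """Fit outcome ~ a * exp(-b * pick) via least squares on log(outcome).

    Assumes every outcome is positive. The curve shape is an assumption.
    """
    n = len(picks)
    logs = [math.log(y) for y in outcomes]
    mean_x = sum(picks) / n
    mean_y = sum(logs) / n
    sxx = sum((x - mean_x) ** 2 for x in picks)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(picks, logs))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return lambda pick: math.exp(intercept + slope * pick)

def value_rmse(outlet_ranks, actual_ranks, value):
    """RMSE of pick-value differences over the players an outlet ranked."""
    errors = [value(outlet_ranks[p]) - value(actual_ranks[p]) for p in outlet_ranks]
    return math.sqrt(sum(e * e for e in errors) / len(errors))

# Synthetic history: outcome decays smoothly with pick number (made up).
picks = list(range(1, 65))
outcomes = [100 * math.exp(-0.05 * p) for p in picks]
value = fit_pick_value(picks, outcomes)

# Ranking the best player 3rd vs. ranking the 57th best player 64th.
miss_top = abs(value(3) - value(1))
miss_late = abs(value(64) - value(57))
print(miss_top > miss_late)

# Toy outlet board vs. toy DY+5 ranks for three fictional players.
outlet = {"A": 3, "B": 1, "C": 2}
actual = {"A": 1, "B": 2, "C": 3}
print(value_rmse(outlet, actual, value))
```

With a curve like this, the two-slot miss at the top costs far more value than the seven-slot miss in the late 2nd, which is the whole point of converting ranks to pick values before taking the RMSE.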

Results

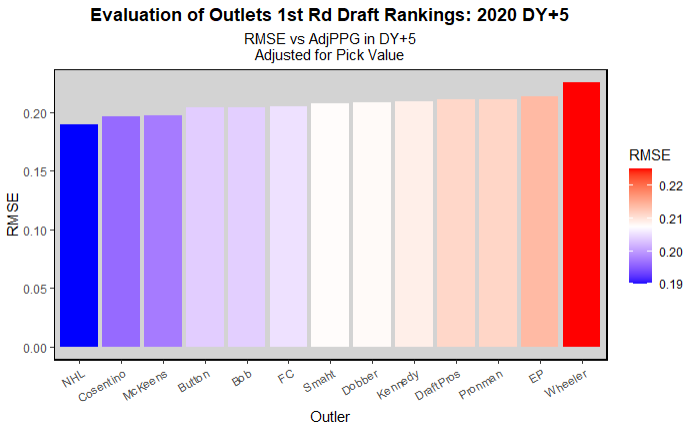

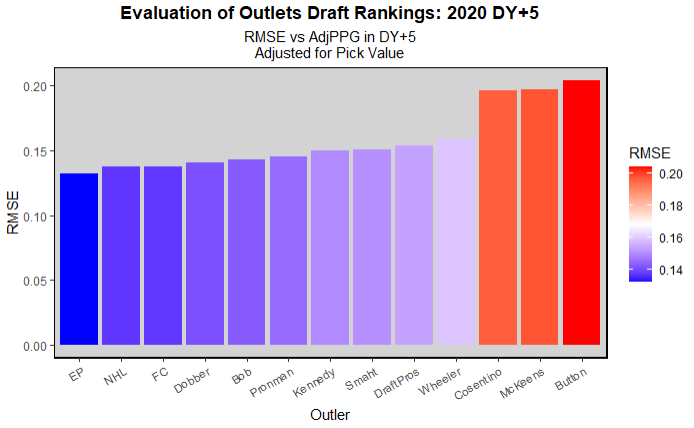

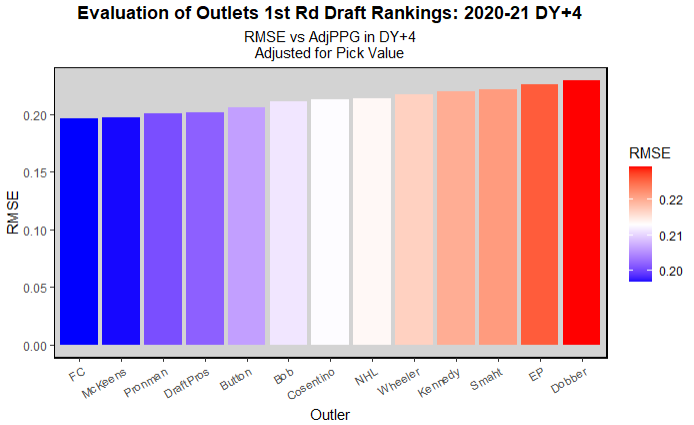

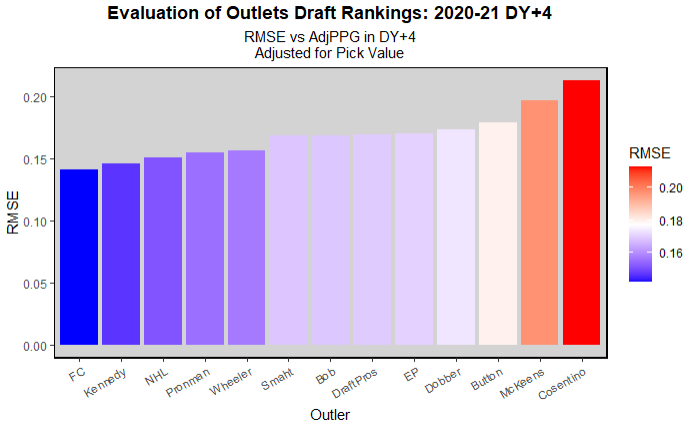

For each of the rankings, I looked at both the first round and the overall rankings. The first round is by far the most important round of the draft, and really even just the top 15 is significantly more important than the rest of the draft. But if you can consistently predict who is more likely to be a steal later in the draft, that has great value too. However, when looking at the full rankings, whichever outlets ranked more players would almost always have a lower RMSE. The difference in value between 50 and 60 is so small, and it is relatively rare that a guy ranked 50th by any outlet will be good enough to go top 5-10 in a redraft and hurt the outlet that ranked him 50th more than a miss on any top 15 pick would.
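
A quick toy example of why longer lists get a lower RMSE. The pick-value curve and the rank pairs are invented for illustration and are not taken from any outlet's actual board.

```python
import math

def value(pick):
    # Hypothetical pick-value curve (an assumption, not the fitted model).
    return 100 * math.exp(-0.05 * pick)

def rmse(pairs):
    """pairs: list of (outlet_rank, actual_rank) tuples."""
    squared = [(value(o) - value(a)) ** 2 for o, a in pairs]
    return math.sqrt(sum(squared) / len(squared))

# Made-up first round: a couple of big misses near the top.
first_round = [(1, 3), (2, 1), (3, 2), (10, 25), (20, 8)]
# Made-up later ranks: large rank misses, but tiny value differences.
later_ranks = [(50, 60), (60, 50), (70, 90), (90, 70), (100, 120)]

short_list = rmse(first_round)
long_list = rmse(first_round + later_ranks)
print(short_list, long_list)
```

The later ranks add almost no squared error but still increase the player count in the mean, so the longer list scores better without the outlet actually being any sharper at the top.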

In 2020, the actual first round draft order did a better job of predicting future success than any outlet. However, I don't necessarily give the league a ton of credit for that, and I wouldn't start using draft position or team boards as a major factor in a discussion about a prospect. There are 32 different team boards, and each team could have thought every other team was making a massive reach while they got the steal of the draft. Also, the overall EP ranking was better than the NHL draft order, despite EP's first round being poor.

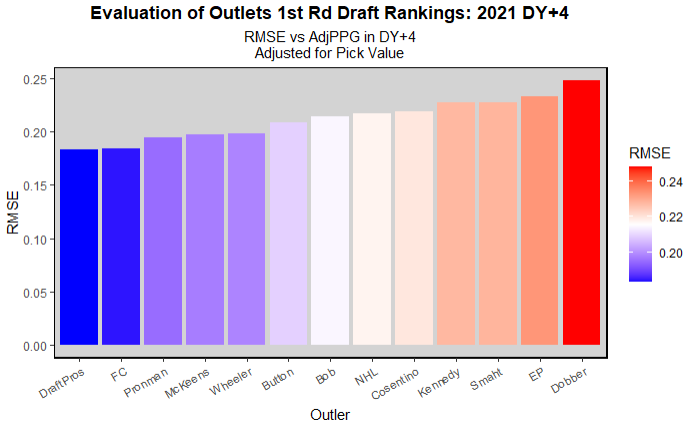

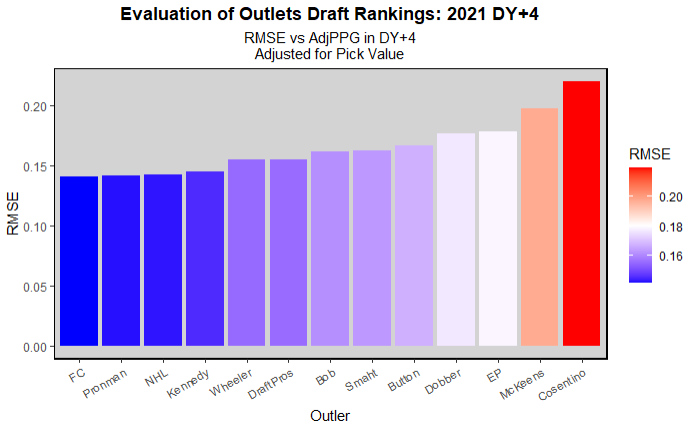

Moving on to DY+4 ranks, I looked at both the combined 2020-21 ranks and the 2021 ranks on their own, to see how much things change from year to year compared to just the 2020 ranks. It changed a good bit: EP was still near the bottom for first rounders, but the NHL was also in the back half, and Scott Wheeler went from last to the top half. Then for the combined 2020-21 rankings, you see 3 of the public scouting conglomerates near the bottom for first rounders, but you also see FC and McKeen's at the top and the NHL draft order in the middle. So I don't think the type of source making the rankings (an organization, an individual, or the league) really means all that much.

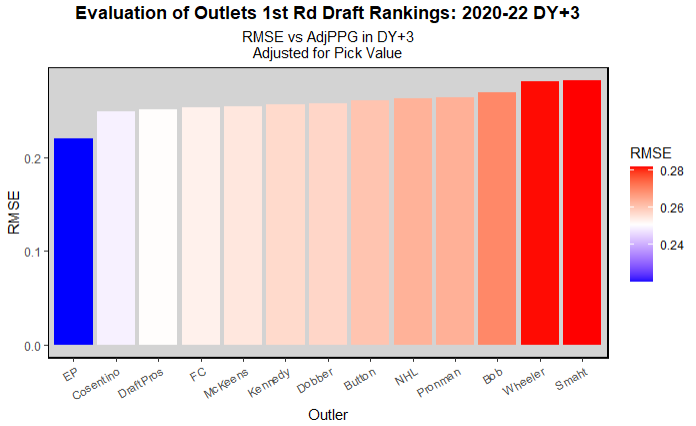

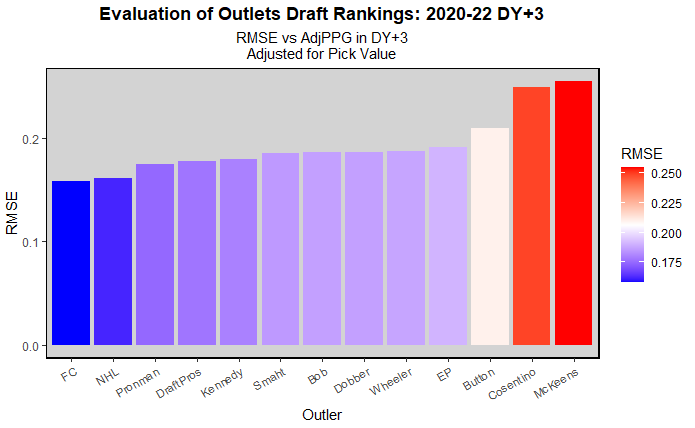

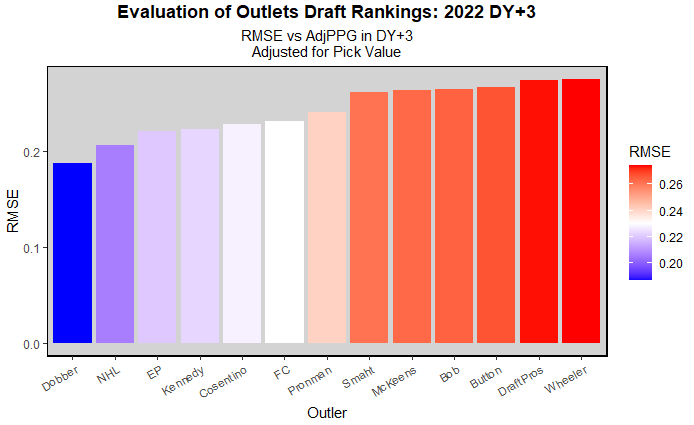

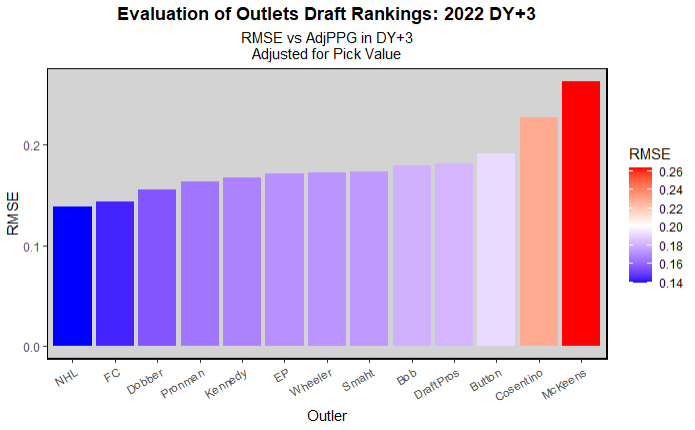

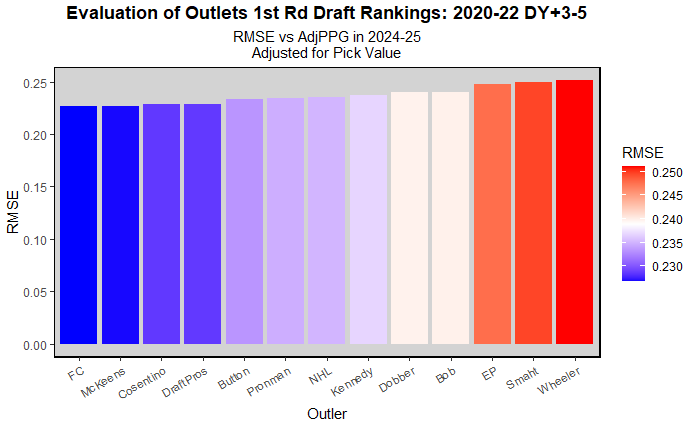

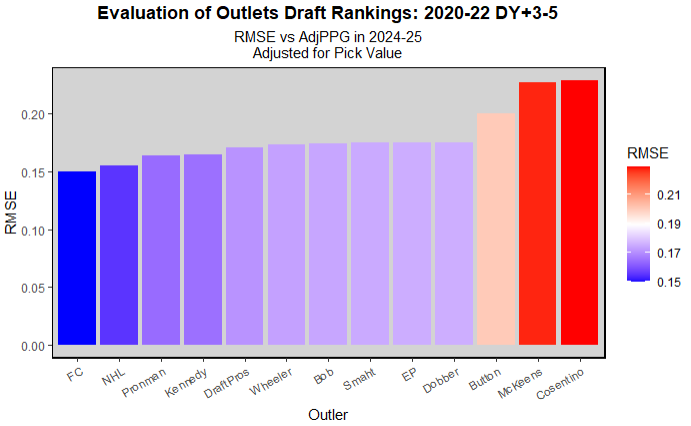

Moving on to DY+3 rankings, I did the same thing, looking at all of the DY+3 rankings between 2020 and 2022 and at the 2022 rankings by themselves. These ranks were basically flipped from the 2021 ones: Dobber and EP crushed it, and everyone else just kinda floated around in the middle. Obviously this is a small sample size using questionable data for some of it, but it tells me that it is incredibly hard to consistently beat the average outcomes for draft slots. To check that, I looked at the rankings for each of the 2020-22 classes using the most current information we have (DY+5 ranks for 2020, DY+3 for 2022) and got these results:

Looking at this, it is clear that there is almost no difference between the best and worst public evaluators, at least among those with big enough followings for me to track. Maybe outlets or individuals with fewer followers would be way worse (or maybe way better), but there is so much groupthink in public draft discussion that I doubt anybody would stand out that much. The gap between Wheeler and FC is about the same as the gap between the 9th and 10th picks, and I think it would only shrink if I included more seasons of data. That also doesn't mean the difference between a hypothetical "team FC pick" and "team Wheeler pick" would be the difference between 9th and 10th overall. It means that if FC picked the entire first round based on their board, and Wheeler picked the entire first round based on his board, the difference in value they would get would be that of the 9th and 10th overall picks. Sort of. Obviously that is an absurd scenario: no team has all 32 1sts, the draft strategy would be different, and they would pick a lot of the same players. It is more that the information you gain from FC is more accurate than what you gain from Wheeler, but how valuable that actually is, especially with how much variance there is, is highly questionable. Also, as with all the others, the overall ranking is heavily skewed towards outlets that rank more players, so posting it here probably isn't a great idea, and I wouldn't really read into it at all.

One more wrench thrown into this was the covid pandemic. Most of the years I looked at were right in the middle of it, so players weren't playing and fans weren't allowed into the stands, which made evaluations much harder and far from standard. Maybe in a few years, if I do this exercise with the 2022-2025 classes as well, we could see vastly different results, with maybe a noticeable difference between the top and bottom performers. Another issue is that with scouting teams like eliteprospects, scouts joined and left over the years, so we don't know how much of an impact their voices had or how much that changed the team's scouting philosophy.

Data

I collected this data from a variety of sources. All of the points data came from eliteprospects, and I used that to create the draft pick value models. I could have just used Schuckers' chart or something, but I wanted to make my own with my own parameters, and I think I probably would have had similar results either way. I then collected the rankings from every site I could. I pay for EP premium/rinkside, so I was able to access their rankings easily. Most of the others were on the Athletic or TSN or THW, and I was able to get them from there. Smaht's, FC's, and Dobber's were on their respective websites. I didn't want to pay for McKeen's (though I do kind of want to now, to look at their data and reports for a different project), so I just used the top 32 posted on EP, or occasionally one of their writers would post a top 50 on HockeyDB or reddit, in which case I used that if I could find it.